Load Balancer Testing with a Honeypot Daemon

This is a write-up of a talk I originally gave at DevopsDays London in March 2013. I had a lot of positive comments about it, and people have asked me repeatedly to write it up.

Background

At a previous contract, my client had, over the course of a few years, outsourced a number of services under many different domains. Our task was to move the previously outsourced services into our own datacentre, both as a cost-saving exercise and to recover flexibility that had been lost.

In moving all these services around, there evolved a load balancer configuration that consisted of:

- Some hardware load balancers managed by the datacentre provider that mapped ports and also unwrapped SSL for a number of the domains. These were inflexible and couldn't cope with the number of domains and certificates we needed to manage.

- Puppet-managed software load balancers running:

  - Stunnel to unwrap SSL

  - HAProxy as a primary load balancer

  - nginx as a temporary measure for service migration, for example, dark launch

As you can imagine there were a lot of moving parts in this system, and something inevitably broke.

In our case, an innocuous-looking change passed through code review that broke transmission of accurate X-Forwarded-For headers. The access control to some of our services was relaxed for certain IP ranges, as reported in the X-Forwarded-For header. Only a couple of days after the change went in, we found that Googlebot had spidered some of our internal wiki pages! Not good! The lesson is obvious and important: you must write tests of your infrastructure.

Unit testing a load balancer

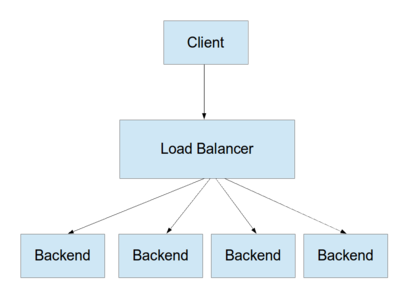

A load balancer is a network service that forwards incoming requests to one of a number of backend services:

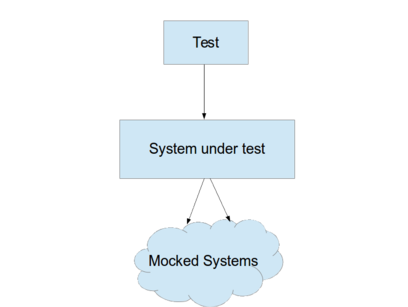

A pattern for unit testing is to substitute mock implementations for all components of a system except the unit under test. We can then verify the outputs for a range of given inputs.

To be able to unit test the Puppet recipes for the load balancers, we need to be able to create "mock" network services on arbitrary IPs and ports that the load balancer will communicate with, and which can respond with enough information for the test to check that the load balancer has forwarded each incoming request to the right host with the right headers included.

The first incarnation of tests was clumsy. It would spin up dozens of network interface aliases with various IPs, put a webservice behind those, then run the tests against the mock webservice. The most serious problem with this approach was that it required slight load balancer configuration changes so that the new services could come up cleanly. It also required tests to run as root to create the interface aliases and bind the low port numbers required. It was also slow. It only mocked the happy path, so tests could hit real services if there were problems with the load balancer configuration.

I spent some time researching whether it would be possible to run these mock network services without significantly altering the network stack of the machine under test. Was there any tooling around using promiscuous mode interfaces, perhaps? I soon discovered libdnet and from there Honeyd, and realised this would do exactly what I needed.

Mock services with honeyd

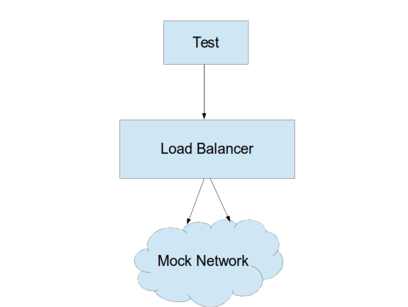

Honeyd is a service intended to create virtual servers on a network, which can respond to TCP requests etc., for network intrusion detection. It does all this by using promiscuous mode networking and raw sockets, so that it doesn't require changes to the host's real application-level network stack at all. The honeyd literature also pointed me in the direction of combining honeyd with farpd so that the mock servers can respond to ARP requests.

More complicated was that I needed to write scripts to create mock TCP services. I needed my mock services to send back HTTP headers, IPs, ports and SSL details so that the test could verify these were as expected. To create a service, Honeyd requires you to write programs that communicate on stdin and stdout as if these were the network socket (this is similar to inetd). While it is easy to write this for HTTP and a generic TCP socket, it's harder for HTTPS, as the SSL libraries will only wrap a single bi-directional file descriptor. I couldn't find a way of treating stdin and stdout as a single file descriptor. I eventually solved this by wrapping one end of a pipe with SSL and proxying the other end of the pipe to stdin and stdout. If anyone knows of a better solution for this, please let me know.
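
The pipe trick described above could be sketched roughly as follows. This is not the original script. It assumes a `socket.socketpair()` plays the role of the pipe, and the names `start_relay` and `wrap_server_side`, as well as the certificate paths passed in, are made up for illustration.

```python
import os
import socket
import ssl
import threading


def write_all(fd, data):
    """Write every byte of data to a raw file descriptor."""
    while data:
        written = os.write(fd, data)
        data = data[written:]


def start_relay(in_fd=0, out_fd=1):
    """Relay in_fd/out_fd to one end of a socketpair and return the other end.

    Returns (inner, out_thread): `inner` is a single bi-directional socket
    that an SSL library can wrap; `out_thread` finishes once `inner` has
    been closed and everything it sent has been copied to out_fd.
    """
    inner, outer = socket.socketpair()

    def inbound():
        # Bytes arriving from the network (stdin) go into the socketpair.
        try:
            while True:
                data = os.read(in_fd, 4096)
                if not data:
                    break
                outer.sendall(data)
            outer.shutdown(socket.SHUT_WR)
        except OSError:
            pass  # the other side has gone away; nothing left to relay

    def outbound():
        # Bytes produced by the wrapped socket go back out (stdout).
        while True:
            data = outer.recv(4096)
            if not data:
                break
            write_all(out_fd, data)

    threading.Thread(target=inbound, daemon=True).start()
    out_thread = threading.Thread(target=outbound)
    out_thread.start()
    # `outer` is deliberately left open until the process exits; a service
    # script of this kind handles one connection and then terminates.
    return inner, out_thread


def wrap_server_side(sock, certfile, keyfile):
    """Wrap the inner socket in server-side TLS (paths are placeholders)."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.load_cert_chain(certfile=certfile, keyfile=keyfile)
    return context.wrap_socket(sock, server_side=True)
```

A service script would call `start_relay()`, pass the returned socket to `wrap_server_side()`, speak HTTP over the resulting TLS socket, close it, and then join the returned thread so that the final bytes reach stdout before the process exits.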

With these in place, I was able to create a honeyd configuration that reflected our real network:

    # All machines we create will have these properties
    create base
    set base personality "Linux 2.4.18"
    set base uptime 1728650
    set base maxfds 35
    set base default tcp action reset

    # Create a standard webserver template
    clone webserver base
    add webserver tcp port 80 "/usr/share/honeyd/scripts/http.py --REMOTE_HOST $ipsrc --REMOTE_PORT $sport --PORT $dport --IP $ipdst --SSL no"

    # Network definition
    bind 10.0.1.3 webserver
    bind 10.0.1.11 webserver
    ...
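
To give an idea of what a script like `http.py` has to do, here is a minimal sketch rather than the original code. It accepts the same flags as the configuration above, reads one HTTP request from stdin, and replies on stdout with a JSON document describing what the mock backend saw. The JSON key names (`backend_ip`, `headers` and so on) are my own assumptions.

```python
import argparse
import json
import sys


def parse_request(stream):
    """Read an HTTP request head from a binary stream.

    Returns (method, path, headers) with headers as a plain dict.
    """
    request_line = stream.readline().decode("latin-1").strip()
    method, path, _version = request_line.split(" ", 2)
    headers = {}
    for raw in iter(stream.readline, b""):
        line = raw.decode("latin-1").strip()
        if not line:  # a blank line ends the header block
            break
        name, _, value = line.partition(":")
        headers[name.strip()] = value.strip()
    return method, path, headers


def build_response(report):
    """Serialise the report as the JSON body of a minimal HTTP response."""
    body = json.dumps(report, sort_keys=True).encode("utf-8")
    head = (
        "HTTP/1.0 200 OK\r\n"
        "Content-Type: application/json\r\n"
        f"Content-Length: {len(body)}\r\n"
        "Connection: close\r\n\r\n"
    ).encode("ascii")
    return head + body


def main(argv=None, stdin=None, stdout=None):
    """Handle one request; honeyd connects stdin/stdout to the client."""
    if stdin is None:
        stdin = sys.stdin.buffer
    if stdout is None:
        stdout = sys.stdout.buffer
    parser = argparse.ArgumentParser()
    for flag in ("--REMOTE_HOST", "--REMOTE_PORT", "--PORT", "--IP", "--SSL"):
        parser.add_argument(flag, default="")
    args = parser.parse_args(argv)

    method, path, headers = parse_request(stdin)
    report = {
        "backend_ip": args.IP,        # the mock server's own address
        "backend_port": args.PORT,
        "remote_host": args.REMOTE_HOST,  # the peer, i.e. the load balancer
        "remote_port": args.REMOTE_PORT,
        "ssl": args.SSL,
        "method": method,
        "path": path,
        "headers": headers,
    }
    stdout.write(build_response(report))
    stdout.flush()
```

A real script would finish by calling `main()`. Taking `argv`, `stdin` and `stdout` as parameters just makes the handler easy to exercise without honeyd.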

This was all coupled to an interface created with the Linux veth network driver (after trying a few other mock networking devices that didn't work). With Debian's ifup hooks, I was able to arrange it so that bringing up this network interface would start honeyd and farpd and configure routes so that the honeynet would be seen in preference to the real network. There is a little subtlety in this, because we needed the real DNS servers to remain visible, as the load balancer requires DNS to work. Running ifdown would restore everything to normal.

Writing the tests

The tests were then fairly simple BDD tests against the mocked load balancer, for example:

    Feature: Load balance production services

      Scenario Outline: Path-based HTTP backend selection
        Given the load balancer is listening on port 8001
        When I make a request for http://<domain><path> to the loadbalancer
        Then the backend host is <ip>
        And the path requested is <path>
        And the backend request contained a header Host: <domain>
        And the backend request contained a valid X-Forwarded-For header

        Examples:
          | domain             | path      | ip             |
          | www.acme.mobi      | /         | 42.323.167.197 |
          | api.acmelabs.co.uk | /api/cfe/ | 10.0.1.11      |
          | api.acmelabs.co.uk | /api/ccg/ | 10.0.1.3       |
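
The checks behind the `Then`/`And` steps might look something like the sketch below. It makes several assumptions: the mock backend's reply has already been decoded into a dictionary with the hypothetical keys `backend_ip`, `path` and `headers`; and "valid" means the X-Forwarded-For value parses as a list of IP addresses whose last entry is the address the test client connected from. The original step definitions may well have defined validity differently.

```python
import ipaddress


def parse_forwarded_for(value):
    """Split an X-Forwarded-For value into ip_address objects.

    Raises ValueError if the value is empty or any entry is malformed.
    """
    return [ipaddress.ip_address(part.strip()) for part in value.split(",")]


def check_backend_report(report, expected_ip, expected_path,
                         expected_host, client_ip):
    """Return a list of failure messages; an empty list means success."""
    failures = []
    if report.get("backend_ip") != expected_ip:
        failures.append("request reached the wrong backend")
    if report.get("path") != expected_path:
        failures.append("backend saw the wrong path")

    headers = report.get("headers", {})
    if headers.get("Host") != expected_host:
        failures.append("Host header was not passed through")

    try:
        chain = parse_forwarded_for(headers.get("X-Forwarded-For", ""))
    except ValueError:
        failures.append("X-Forwarded-For is missing or malformed")
    else:
        # Assumption: the load balancer appends the connecting client's
        # address, so it should be the final entry in the chain.
        if chain[-1] != ipaddress.ip_address(client_ip):
            failures.append("X-Forwarded-For does not end with the client IP")
    return failures
```

Returning a list of messages rather than raising on the first problem means a single failing scenario can report everything that is wrong with a route at once.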

The honeyd backend is flexible and fast. Of course it was all Puppetised as a single Puppet module that added all the test support; the load balancer recipe was applied unmodified. While I set it up as a virtual network device for use on development load balancer VMs, you could also deploy it on a real network, for example for continuous integration tests or for testing hardware network devices.

As I mentioned in my previous post, BDD tests like this make it easier to reason about the system. The tests don't just catch errors (protecting against losing our vital X-Forwarded-For headers); they also give an overview of the load balancer's functions, which makes it easier to understand and adapt in a test-first way as services migrate. We were able to make changes faster and more confidently, and ultimately to complete the migration project swiftly and successfully.
